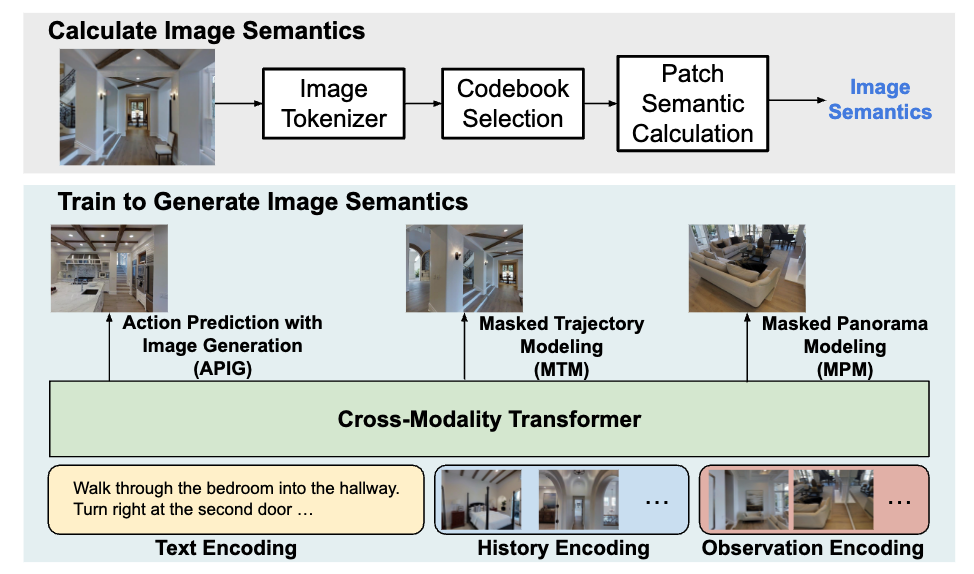

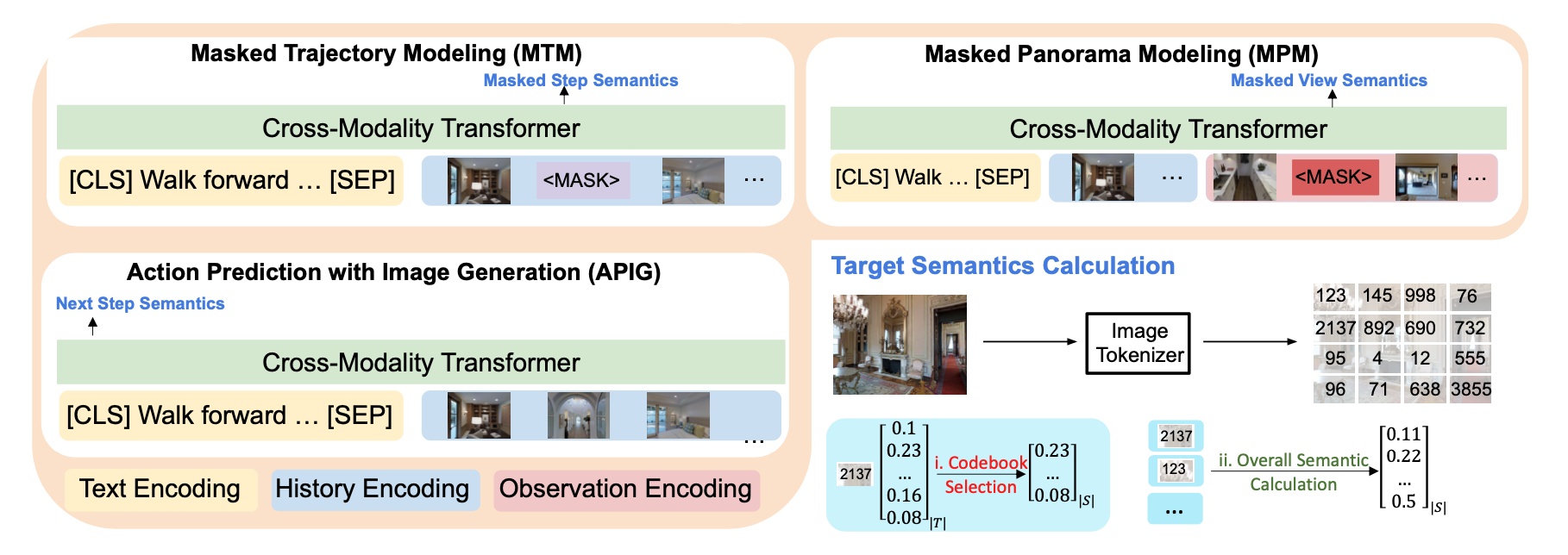

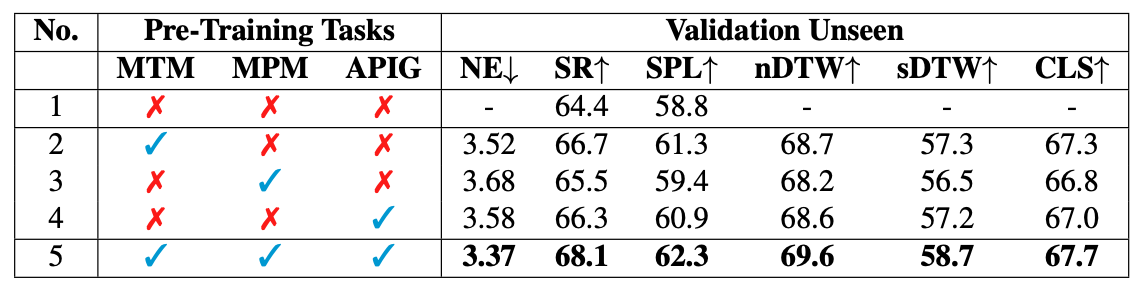

Vision-and-Language Navigation (VLN) is a task that requires an agent to navigate through an environment based on natural language instructions. At each step, the agent takes the next action by selecting from a set of navigable locations. In this paper, we take one step further and explore whether the agent can benefit from generating the potential future view during navigation. Intuitively, humans form an expectation of what the future environment will look like, based on the natural language instructions and surrounding views, and this expectation aids correct navigation. Hence, to equip the agent with the ability to generate the semantics of future navigation views, we first propose three proxy tasks during the agent's in-domain pre-training: Masked Panorama Modeling (MPM), Masked Trajectory Modeling (MTM), and Action Prediction with Image Generation (APIG). These three objectives teach the model to predict missing views in a panorama (MPM), predict missing steps in the full trajectory (MTM), and generate the next view based on the full instruction and navigation history (APIG), respectively. We then fine-tune the agent on the VLN task with an auxiliary loss that minimizes the difference between the view semantics generated by the agent and the ground-truth view semantics of the next step. Empirically, our VLN-SIG achieves a new state of the art on both the Room-to-Room dataset and the CVDN dataset. We further show qualitatively that our agent learns to fill in missing patches in future views, which makes the agent's predicted actions more interpretable. Lastly, we demonstrate that learning to predict future view semantics also enables the agent to perform better on longer paths.

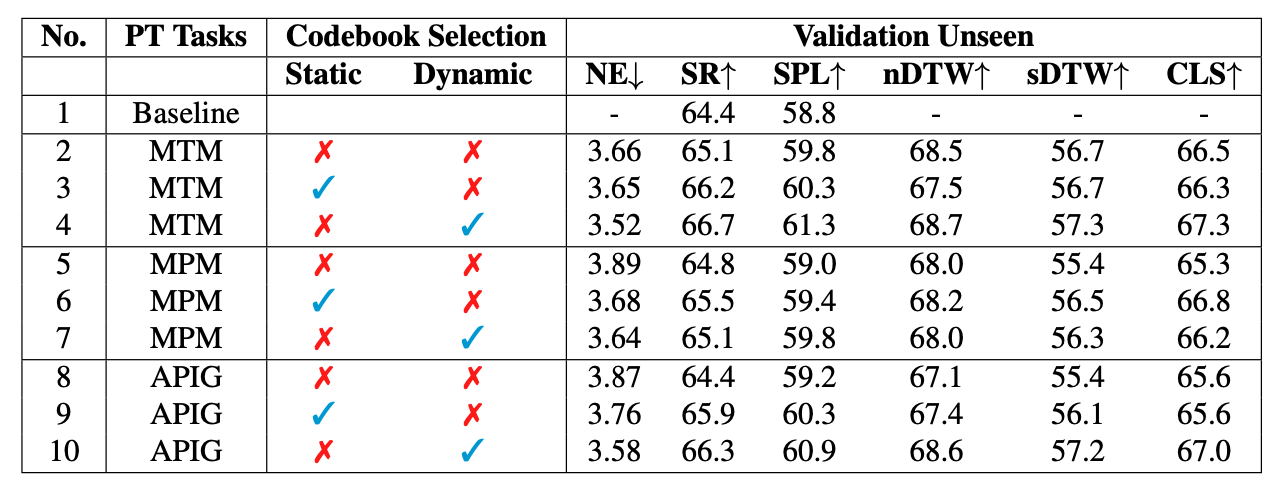

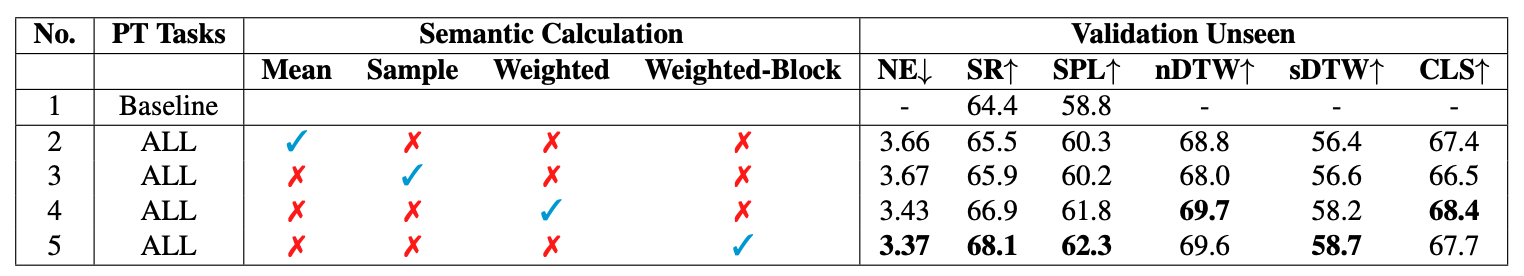

We first calculate the overall image semantics with a weight function, after filtering the visual vocabulary with a dynamic codebook selection method. Then, we pre-train the VLN agent with three proxy tasks to learn the image semantic information. Specifically, Masked Trajectory Modeling (MTM) predicts the missing image semantics in a given navigation trajectory based on the instructions. Masked Panorama Modeling (MPM) predicts the image semantics of the missing views in a panorama. Together, MTM and MPM help the agent learn image semantics in both the temporal and the spatial dimension. Action Prediction with Image Generation (APIG) mimics the action prediction process and predicts the image semantics of the next step. Lastly, we fine-tune the navigation agent with both the navigation loss and the APIG loss.
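The weighted image semantics described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the order of filtering and normalization, and the soft cross-entropy used as the "difference" measure are all assumptions made for illustration. Each patch is assumed to come with a vector of logits over the visual vocabulary, and codebook selection is assumed to have already produced a list of kept token indices.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def overall_semantics(patch_logits, weights, selected_tokens):
    """Weighted average of per-patch token distributions (hypothetical helper).

    patch_logits: one list of vocabulary logits per image patch
    weights: one non-negative weight per patch (normalized here to sum to 1)
    selected_tokens: vocabulary indices kept by codebook selection (assumed given)
    """
    total_w = sum(weights)
    norm_w = [w / total_w for w in weights]
    semantics = [0.0] * len(selected_tokens)
    for logits, w in zip(patch_logits, norm_w):
        # Assumption: renormalize each patch over the filtered vocabulary.
        probs = softmax([logits[t] for t in selected_tokens])
        for k, p in enumerate(probs):
            semantics[k] += w * p
    return semantics

def soft_cross_entropy(target, predicted, eps=1e-12):
    """One possible difference measure between two distributions (an assumption)."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, predicted))

# Toy example: 3 patches, a 4-token vocabulary, 3 tokens kept after filtering.
patch_logits = [[2.0, 0.0, 1.0, -1.0], [0.0, 3.0, 0.0, 0.0], [1.0, 1.0, 1.0, 1.0]]
selected = [0, 1, 2]
uniform = overall_semantics(patch_logits, [1.0, 1.0, 1.0], selected)
indicator = overall_semantics(patch_logits, [0.0, 1.0, 0.0], selected)
```

With uniform weights the result summarizes the whole view; with an indicator weight vector it reduces to the token distribution of a single target patch.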

| Model | R2R Val Unseen SR | R2R Val Unseen SPL | R2R Test SR | R2R Test SPL | CVDN Val Unseen GP | CVDN Test GP |
|---|---|---|---|---|---|---|
| PREVALENT | 58 | 53 | 54 | 51 | 3.15 | 2.44 |
| Rec-BERT | 63 | 57 | 63 | 57 | - | - |
| HAMT | 66 | 61 | 65 | 60 | 5.13 | 5.58 |
| Ours | 68 | 62 | 65 | 60 | 5.52 | 5.83 |
| DUET* | 72 | 60 | 69 | 59 | - | - |
| Ours* | 72 | 62 | 72 | 60 | - | - |

Comparison with state-of-the-art agents on the Room-to-Room (R2R) and Cooperative Vision-and-Dialog Navigation (CVDN) validation unseen sets and test leaderboards. SR is success rate, SPL is success weighted by path length, and GP is goal progress. * denotes agents that utilize graph information during navigation.

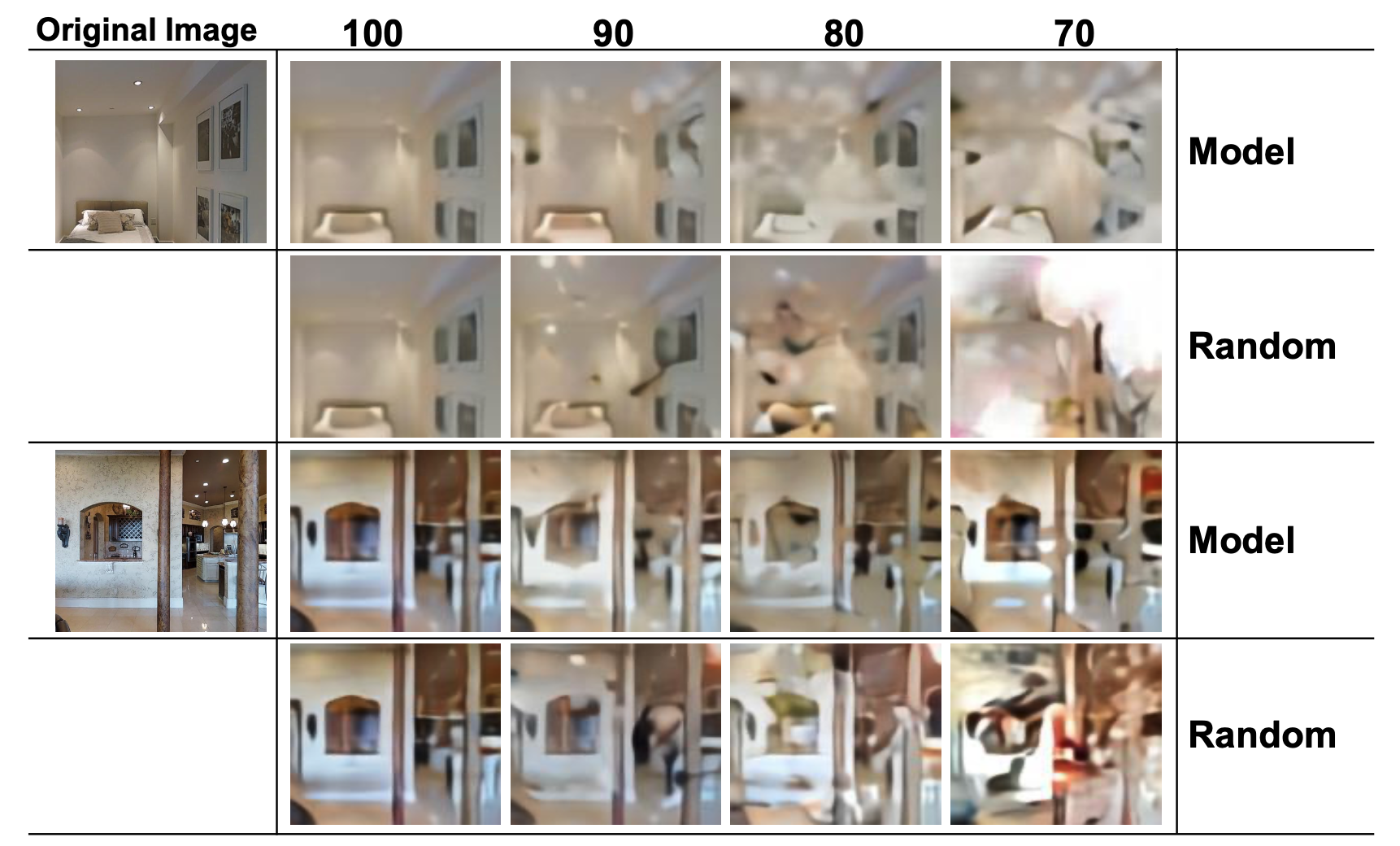

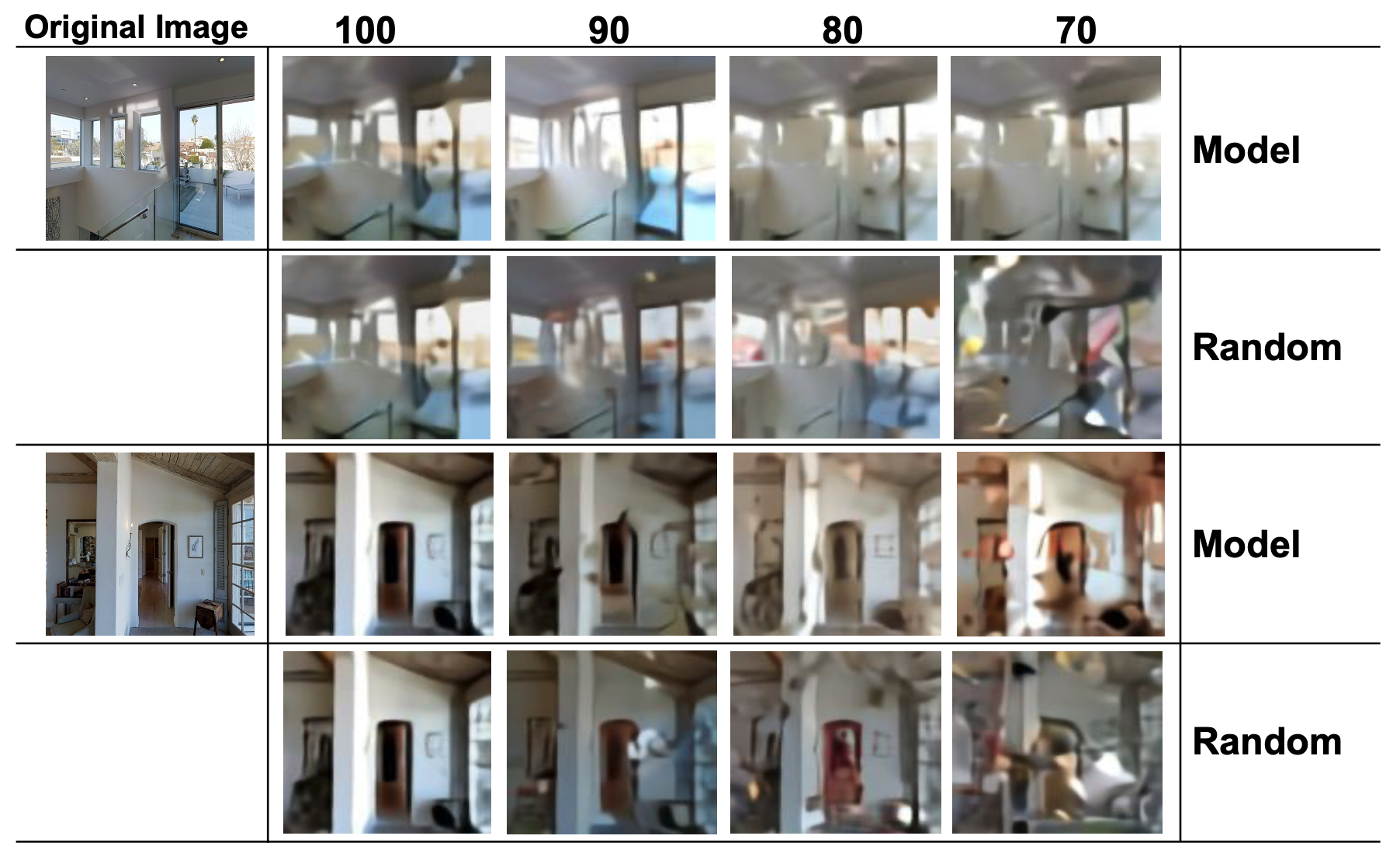

Our model can generate reasonable future semantics and reconstruct future images. We generate the token for each patch in the image with our APIG head, learned with weighted patch probability. We set the weight vector to be an indicator vector, where the weight for the target patch is 1 and the weights for all other patches are 0. We fill x% (x∈{70, 80, 90}) of the patches with ground-truth tokens encoded by the pre-trained dVAE and the remaining patches with tokens generated by our APIG head, and then decode the image with the dVAE decoder. When given 70% of the ground-truth tokens, our generated image almost reconstructs the beds and pictures in the original image, with only small vague areas. In comparison, filling the remaining 30% of the patches with random tokens produces distorted images with large white regions, in which the beds and pictures cannot be identified. We also notice that our model still fails to generate the full image when all the tokens are predicted by our APIG head; it needs at least 70% of the ground-truth tokens to generate images of reasonable quality.
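The token-mixing step in this reconstruction experiment can be sketched as follows. This is only an illustration under stated assumptions: `mix_tokens` is a hypothetical helper, the kept positions are chosen uniformly at random (the text above does not say how they are chosen), and the dVAE decoding step is omitted because it depends on the pre-trained model.

```python
import random

def mix_tokens(gt_tokens, predicted_tokens, keep_fraction, seed=0):
    """Keep a fraction of ground-truth tokens and fill the rest with predictions.

    gt_tokens: ground-truth visual token ids, one per patch
    predicted_tokens: token ids predicted for the same patches
    keep_fraction: share of patches that keep their ground-truth token (e.g. 0.7)
    """
    if len(gt_tokens) != len(predicted_tokens):
        raise ValueError("token sequences must have the same length")
    n = len(gt_tokens)
    n_keep = round(keep_fraction * n)
    rng = random.Random(seed)
    # Assumption: positions that keep ground truth are sampled uniformly at random.
    keep = set(rng.sample(range(n), n_keep))
    return [gt_tokens[i] if i in keep else predicted_tokens[i] for i in range(n)]

# Toy example with 10 patches; the two id ranges are disjoint, so the origin
# of each mixed token is unambiguous.
gt = list(range(100, 110))
pred = list(range(200, 210))
mixed = mix_tokens(gt, pred, keep_fraction=0.7)
num_from_gt = sum(1 for m, g in zip(mixed, gt) if m == g)
```

The mixed sequence would then be passed to the dVAE decoder to produce the reconstructed image; replacing `pred` with random token ids gives the random-token baseline mentioned above.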

@article{li2023vln-sig,

author = {Jialu Li and Mohit Bansal},

title = {Improving Vision-and-Language Navigation by Generating Future-View Image Semantics},

journal = {CVPR},

year = {2023},

}